People must retain control of autonomous vehicles

Driverless vehicles are being tested on public roads in a number of countries. Credit: Prostock/Getty

Last month, for the first time, a pedestrian was killed in an accident involving a self-driving car. A sport-utility vehicle controlled by an autonomous algorithm hit a woman who was crossing the road in Tempe, Arizona. The safety driver inside the vehicle was unable to prevent the crash.

Although such accidents are rare, their incidence could rise as more vehicles that are capable of driving without human intervention are tested on public roads. In the past year, several countries have passed laws to pave the way for such trials. For example, Singapore modified its Road Traffic Act to permit autonomous cars to drive in designated areas. The Swedish Transport Agency allowed driverless buses to run in northern Stockholm. In the United States, the House of Representatives passed the SELF DRIVE Act to harmonize laws across various states. Similar action is pending in the US Senate, where a vote to support the AV START Act would further liberalize trials of driverless vehicles.

Policymakers are enthusiastic about the potential of autonomous vehicles to reduce road congestion, air pollution and road-traffic accidents1,2. Cheap ride-hailing services could reduce the number of privately owned cars. Machine intelligence can make driving more fuel-efficient, cutting emissions. Autonomous cars could help to reduce the 1.25 million deaths that occur worldwide each year in road crashes3, many of which are caused by human error.

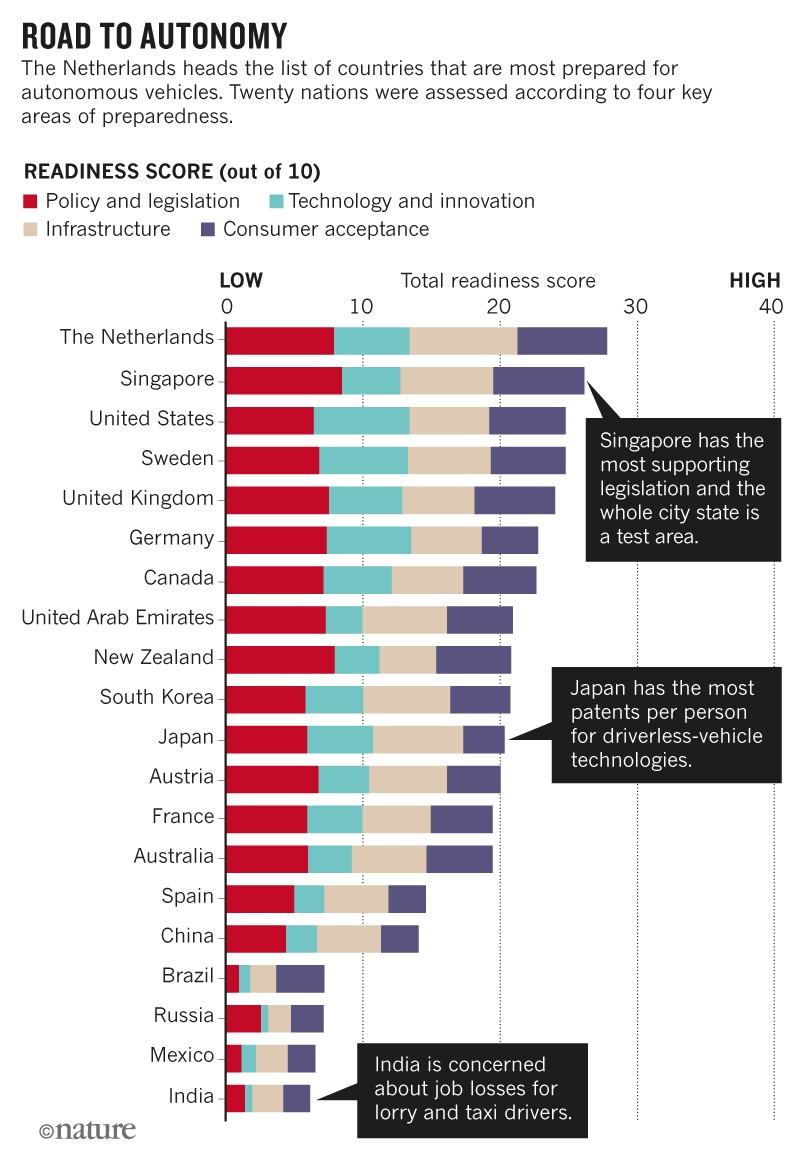

Governments want to pass laws to make this happen (see ‘Road to autonomy’). But they are doing so by temporarily freeing developers of self-driving cars from meeting certain transport safety rules. These rules include the requirement that a human operator be inside the vehicle, that vehicles have safety features such as a steering wheel, brakes and a mirror, and that the features are functional at all times. Some developers are maintaining these aspects, but they are not obliged to do so. There is no guarantee that autonomous vehicles will match the safety standards of current cars.

Source: Autonomous Vehicles Readiness Index (KPMG International, 2018)

Meanwhile, the wider policy implications are not being addressed1,2. Governments stand to lose billions of dollars in tax revenue as fewer individuals own cars. Millions of taxi, lorry and bus drivers will lose their jobs2. And the machine-learning algorithms on which autonomous vehicles rely are far from mature enough to make choices that could mean life or death for pedestrians or drivers.

Policymakers need to work more closely with academics and manufacturers to design appropriate regulations. This is extremely challenging because the research cuts across many disciplines.

Here, we highlight two areas — liability and safety — that require urgent attention.

Liability

Like other producers, developers of autonomous vehicles are legally liable for damages that stem from the defective design, manufacture and marketing of their products. The potential liability risk is great for driverless cars because their complex systems can interact in unexpected ways.

Manufacturers want to minimize the number of liability claims made against them4. One way is to reduce the chance of their product being misused by educating consumers about how it works and alerting them to safety concerns. For example, drug developers provide information on dosages and side effects; electronics manufacturers issue instructions and warnings. Such guidance shapes the expectations of consumers and fosters satisfaction. Yet, much like smartphones, self-driving cars are underpinned by sophisticated technologies that are hard to explain or understand.

A safety driver sits behind the wheel during a test of a self-driving taxi in Yokohama, Japan. Credit: Kiyoshi Ota/Bloomberg/Getty

Instead, developers are designing such products to be easy to use5. People are more likely to buy a product that seems straightforward and that quickly lets them do complicated things, which increases its utility. However, users are then less able to anticipate how the underlying systems will behave, or to recognize problems and fix them. For example, few drivers of computerized cars know how the engine is calibrated5. Similarly, a passenger in an autonomous vehicle might not know why it chooses to make a sharp turn into oncoming traffic or why it does not overtake a slow-moving vehicle.

Worse, deep-learning algorithms are inherently unpredictable. They are built on an opaque decision-making process that is shaped by previous experiences. Each car will be trained differently. No one — not even an algorithm’s designer — can know precisely how an autonomous car will behave under every circumstance.

No law specifies how much training is needed before a deep-learning car can be deemed safe, nor what that training should consist of. Cars from different manufacturers could react in contrasting ways in an emergency: one might swerve around an obstacle, whereas another might slam on the brakes. Rare traffic events, such as a lorry tipping over in high wind, are of particular concern, because they offer few examples from which to train driverless cars.

Advanced interfaces are needed to tell users why an autonomous vehicle is behaving as it does. Today’s dashboards convey a car’s speed and how much fuel remains. Tomorrow’s displays must show the vehicle’s ‘intentions’ and the logic that governs them; for example, they might tell passengers that the car will not overtake the vehicle ahead because there is only a 10% likelihood of success. Little is known about what types of data should be presented or how people will interpret them.

Users often ignore information, even if it is presented clearly and the consequences could be a matter of life or death. For instance, almost 70% of airline passengers do not review safety cards before a flight6, despite being asked. Yet these cards convey important information, including how to put on an oxygen mask and open an emergency exit, in simple terms and on a single page.

Autonomous vehicles will need to communicate much more complicated information. Their sensors and algorithms must understand the behaviours of pedestrians, discriminate between styles of driving and adjust to changes in lighting. When they cannot, users must know how to respond.

Researching ways to present this information effectively is paramount, as are legislative efforts to ensure that users of autonomous vehicles are proficient in using the technology.

Safety

The safety and efficiency benefits of autonomous cars rely on computers making better, quicker decisions than people. Users enter their desired destination and then cede control to the computer. Yet full autonomy has deliberately not been adopted in transport. People are still perceived as more flexible, adaptable and creative than machines, and better able to respond to changing or unforeseen conditions7. Pilots can therefore wrest control from fly-by-wire systems when key computers fail.

The public is right to remain cautious about full automation. Manufacturers need to explain how a car would protect passengers should crucial systems fail. A driverless car must be able to stop safely if its hazard-avoidance algorithms malfunction, its cameras break or its internal maps fail. But this is hard to engineer: without cameras, for example, such a car cannot see where it is going.

A driverless bus shuttles passengers across Southeast University’s Jiulonghu campus in Nanjing, China. Credit: CVG/Getty

In our view, some form of human intervention will always be required. Driverless cars should be treated much like aircraft, which require human involvement even though they are highly automated. Current testing of autonomous vehicles abides by this principle: safety drivers are present, even though developers and regulators talk of full automation.

Nonetheless, having people involved poses safety problems. Autonomous cars will always require users to have a minimum level of skill and will never be easy for some members of the public to operate. People with cognitive impairments, say, might find it difficult to operate these technologies and to override controls. Yet this group includes those who would benefit greatly from self-driving vehicles. For example, older adults8, a demographic of increasing importance, have an elevated risk of crashes because cognitive abilities decline with age9,10. Providing mobility for large numbers of elderly people is an impetus for investment in this technology in Japan, for instance.

A remote supervisor could oversee driverless cars, as air-traffic controllers do for aircraft. But how many supervisors would be needed to keep networks of such vehicles safe? Stretching human capacity too far can cause accidents11. For example, in 1991, an overwhelmed air-traffic controller in Los Angeles, California, mistakenly cleared an aeroplane to land on a runway where another was waiting. Last year, an overload of patients was blamed for a string of medical errors by doctors in Hong Kong.

Policy gaps

Current and planned legislation fails to address these issues. Exempting developers from safety rules poses risks. And developers are not always required to report system failures or to establish competency standards for vehicle operators. Such exemptions also presume, wrongly, that human involvement will ultimately be unnecessary. Favouring industry over users will erode support for the technology from an already sceptical public.

Present legislation sidesteps the education of consumers. The US acts merely require that users are “informed” about the technology before its use. Standards of competency and regular proficiency testing for users are not mentioned. Without standards, it is hard to tell whether consumer education programmes are adequate. And without testing, the risk of incidents might increase.

Moving forward

We call on policymakers to rethink their approach to regulating autonomous vehicles and to consider the following six points when drafting legislation.

Driverless does not, and should not, mean without a human operator. Regulators and manufacturers must acknowledge, rather, that automation changes the nature of the work that people perform7.

Users need information on how autonomous systems are working. Manufacturers must research the limits and reliability of devices that are crucial for safety, including cameras, lasers and radars. When possible, they should make the data from these devices available to vehicle operators in an understandable form.

Operators must demonstrate competence. Developers, researchers and regulators need to agree proficiency standards for users of autonomous vehicles. Competency should be tested by licensing authorities and should supplement existing driving permits. Users who fall short should have their access to such vehicles limited, just as colour-blind pilots are banned from flying at night.

Regular checks on user competency should be mandatory. Regulators, manufacturers and researchers must determine a suitable time interval between tests, so that proficiency is kept up as cognitive abilities change and technology evolves.

Remote monitoring networks should be established. Manufacturers, researchers and legislators need to build supervisory systems for autonomous vehicles. Researchers should supply guidance on the number of vehicles that one supervisor can monitor safely, and on the conditions under which such monitoring is permissible. For example, more supervisors would be needed in poor weather conditions.

Work limits for remote supervisors should be defined. Experts must clarify whether supervisors should be subject to existing working-time regulations. For example, air-traffic controllers are limited in how long they can work.

The path towards autonomy is far from preordained. Considerable challenges remain to be addressed.